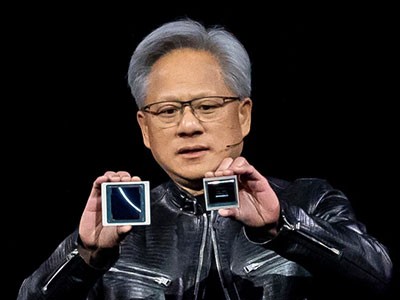

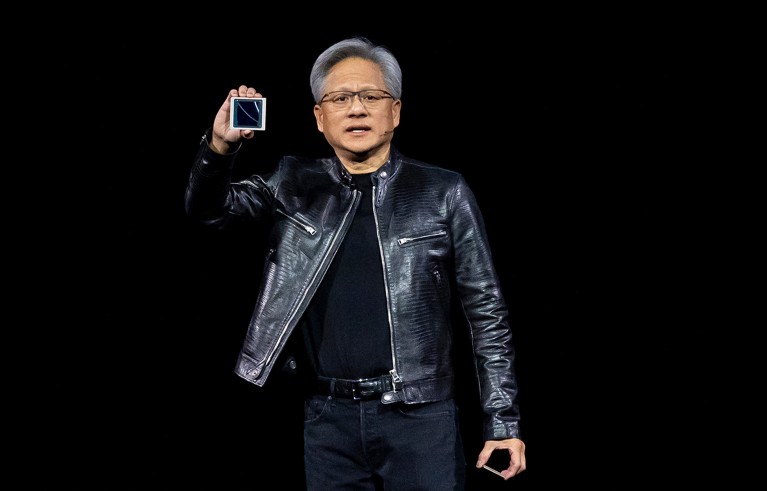

This March, an keen crowd of 12,000 folks stuffed a stadium in San Jose, California. “I hope you notice this isn’t a live performance,” joked Jensen Huang, chief government of chip-making firm Nvidia in close by Santa Clara.

For the subsequent half an hour, Huang prepped the gang to listen to the newest information about graphics processing items (GPUs), his firm’s signature laptop chip, which has been key to artificial-intelligence (AI) advances over the previous decade. Huang held up the corporate’s 2022 mannequin, the Hopper ‘superchip’. “Hopper modified the world,” he mentioned. Then, after a dramatic pause, he revealed one other shiny black rectangle in regards to the dimension of a Publish-it be aware: “That is Blackwell.” The gang cheered.

Again in 2022, Hopper beat the competitors in each class — from picture classification to speech recognition — on MLPerf, a battery of checks generally known as the olympics of AI. As quickly because it hit the market, Hopper grew to become the go-to chip for corporations seeking to supercharge their AI. Now Nvidia guarantees that Blackwell might be, for sure issues, a number of occasions sooner than its predecessor. “I believe Blackwell goes to take us to this subsequent stage of efficiency via a mixture of extra horsepower and in addition how the chips are speaking with one another,” says Dave Salvator, director of product advertising in Nvidia’s Accelerated Computing Group.

Whereas hopes and issues swirl across the affect of AI, the marketplace for AI chips continues to develop. Nvidia at present provides greater than 80% of them; in 2023, it bought 550,000 Hopper chips. Costing at the least US$30,000 every, the {powerful} chips went to knowledge centres, quite than private computer systems. This 12 months, the corporate’s market worth skyrocketed to greater than $2 trillion, making it the third-highest-valued firm on the earth, forward of giants similar to Amazon and Alphabet, the dad or mum firm of Google.

Nvidia’s chief government, Jensen Huang, holds up the expertise agency’s new Blackwell graphics processing unit.Credit score: David Paul Morris/Bloomberg/Getty

Nvidia’s Blackwell chip is a part of a wave of {hardware} developments, ensuing from corporations attempting laborious to maintain tempo with, and help, the AI revolution. Over the previous decade, a lot of the development in AI has come not a lot from intelligent coding methods, as from the easy precept that larger is best. Massive language fashions have more and more been educated on ever bigger knowledge units, requiring ever extra computing energy. By some estimates, US agency OpenAI’s newest mannequin, GPT-4, took 100 occasions extra computing energy to coach than did its predecessor.

Corporations similar to Meta have constructed knowledge centres that depend on Nvidia GPUs. Others, together with Google and IBM, together with a plethora of smaller corporations, have designed their very own AI chips; Meta is now working by itself, too. In the meantime, researchers are experimenting with a variety of chip designs, together with some optimized to work on smaller units. As AI strikes past cloud-computing centres and into cell units, “I don’t assume GPUs are sufficient any extra,” says Cristina Silvano, a pc engineer on the Polytechnic College of Milan in Italy.

These chips all have one thing in frequent: varied methods, together with computing in parallel, extra accessible reminiscence and numerical shorthand, that assist them to beat the velocity obstacles of typical computing.

Chip change

A lot of the deep-learning revolution of the previous decade may be credited to a departure from the standard workhorse of computing: the central processing unit (CPU).

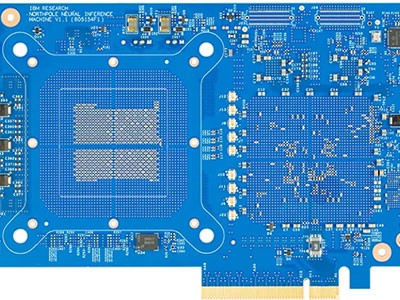

‘Thoughts-blowing’ IBM chip hurries up AI

A CPU is basically a tiny order-following machine. “It mainly seems at an instruction and says, ‘What does this inform me to do?’,” says Vaughn Betz, a pc engineer on the College of Toronto in Canada. On the most elementary stage, a CPU executes directions by flipping transistors, easy electrical switches that signify ‘1’ as on and ‘0’ as off. With simply this binary operation, transistors can carry out extremely complicated calculations.

The ability and effectivity of a CPU relies upon primarily on the dimensions of its transistors — smaller transistors flip sooner, and may be packed extra densely on a chip. Right now, essentially the most superior transistors measure a mere 45 × 20 nanometres, not a lot larger than their atomic constructing blocks. High-of-the-line CPUs pack greater than 100 million transistors right into a sq. millimetre and might carry out a couple of trillion flops (floating level operations per second).

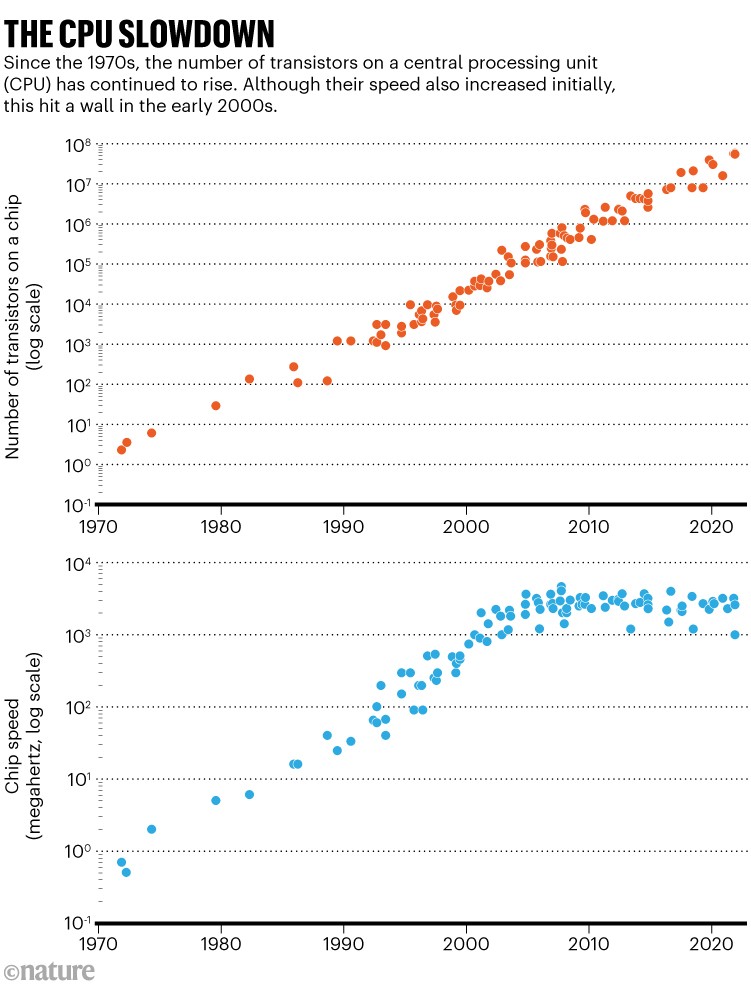

CPUs have improved exponentially because the Seventies. As transistors shrank, their density on a chip doubled each two years (a pattern referred to as Moore’s legislation), and the smaller transistors grew to become sooner (on account of a pattern referred to as Dennard scaling). Progress in CPUs was so fast that it made custom-designing different kinds of chip pointless. “By the point you designed a particular circuit, the CPU was already two occasions sooner,” says Jason Cong, a pc engineer on the College of California, Los Angeles. However round 2005, smaller transistors stopped getting sooner, and up to now few years, engineers grew to become involved that they couldn’t make transistors a lot smaller, because the units began butting up in opposition to the basic legal guidelines of physics (see ‘The CPU slowdown’).

Supply: Karl Rupp

The slowdown in CPU progress led laptop engineers to significantly take into account different kinds of chip. Early variations of GPUs had been round because the late Seventies, designed to do repetitive calculations for video gaming, similar to rendering the color of pixels on the display screen as rapidly as attainable. Whereas CPUs course of directions sequentially, GPUs course of extra directions in parallel.

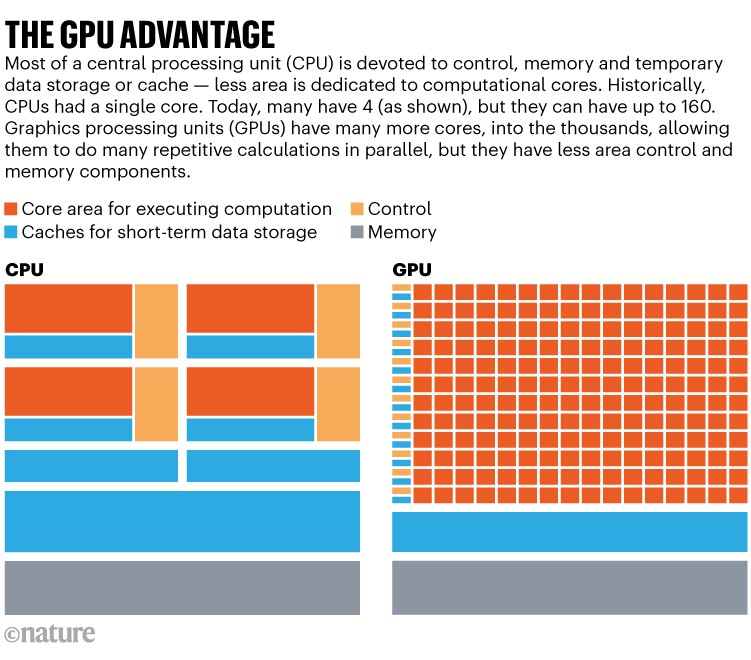

Usually, CPUs have just a few {powerful} ‘cores’ during which calculations are performed. Every of those particular person processing items receives directions and is supported by a number of caches that retailer knowledge within the quick time period. This structure makes CPUs very best for complicated computations. GPUs, in contrast, have a whole lot or 1000’s of smaller cores, every supported by fewer ancillary methods, similar to caches (see ‘The GPU benefit’). Having many smaller cores permits GPUs to do many easy, repetitive calculations in parallel a lot sooner than can a CPU. (This totally different method to calculating for GPUs entails totally different laptop code. Salvator factors out that Nvidia has twice as many engineers engaged on code because it does on {hardware}.)

Supply: Cornell College

In 2012, Geoffrey Hinton, a pc scientist on the College of Toronto and one of many early advocates of neural networks — algorithms impressed by the mind — challenged his then scholar Alex Krizhevsky to win the annual ImageNet competitors, with the purpose of coaching a pc to appropriately establish photographs of on a regular basis objects. On the time, packages utilizing CPUs have been managing 75% accuracy, at finest. Krizhevsky realized {that a} neural-net AI educated utilizing GPUs may do higher, on condition that the bread and butter of machine studying is easy, repetitive calculations.

Krizhevsky and his collaborators1 used two GPUs to coach their neural community, referred to as AlexNet. Their AI had 60 million parameters (inside variables that AI fashions use to make predictions), which on the time was unprecedented. AlexNet stomped on the competitors, scoring 85% accuracy and stunning the world with its capacity to reliably distinguish between comparable photographs, similar to these of leopards and jaguars. In a 12 months or two, each ImageNet entrant was utilizing GPUs; since then, AI researchers have leant closely on these chips.

Though GPUs, like CPUs, are nonetheless sure by the constraints of transistors, their capacity to compute in parallel has allowed them to speed up AI duties. To coach the giant language mannequin GPT-3, which has 175 billion parameters, researchers at OpenAI needed to run 1,024 GPUs for a month straight, which price a number of million {dollars}. In complete, these GPUs carried out 1023 flops. The identical coaching would have taken a whole lot to 1000’s of occasions longer on comparable CPUs. “With extra computation, you may prepare an even bigger community, they usually began getting lots higher,” Betz says. GPT-4, for instance, launched in March 2023, has an astonishing 1.8 trillion parameters, a tenfold enhance over its predecessor.

Though GPUs have been central to the AI revolution, they aren’t the one present on the town. As AI functions have proliferated, so too have AI chips.

Chipping in

Typically there isn’t sufficient time to feed directions right into a chip. Subject-programmable gate arrays (FPGAs) are designed so a pc engineer can program the chip’s circuits to observe particular orders in lieu of directions. “The place a chip like a CPU or GPU should look ahead to exterior directions, an FPGA simply does it,” Betz says.

Who’s making chips for AI? Chinese language producers lag behind US tech giants

For Cong, an FPGA is “like a field of Legos”. An engineer can construct an FPGA circuit by circuit into any design they’ll think about, whether or not it’s for a washing-machine sensor or AI to information a self-driving car. Nonetheless, in contrast with AI chips which have non-adjustable circuits, similar to GPUs, FPGAs may be slower and fewer environment friendly. Corporations together with Altera — a subsidiary of Intel in San Jose — market FPGAs for quite a lot of AI functions, together with medical imaging, and researchers have discovered them helpful for area of interest duties, similar to dealing with knowledge at particle colliders. The straightforward programmability of FPGAs additionally makes them helpful for prototyping, Silvano says. She typically designs AI chips utilizing FPGAs earlier than she makes an attempt the laborious strategy of fabricating them.

Silvano additionally works on a class of a lot smaller AI chips, boosting their computational effectivity in order that they’ll enhance cell units. Though it could be good to easily put a full GPU on a cell phone, she says, vitality prices and worth make that prohibitive. Slimmed-down AI chips can help the telephone’s CPU by dealing with the tedious duties of AI functions, similar to picture recognition, with out counting on sending knowledge to the cloud.

Maybe essentially the most laborious job AI chips have is multiplying numbers. In 2010, Google had an issue: the corporate needed to do voice transcription for an enormous variety of day by day customers. Coaching an AI to deal with it mechanically would have required, amongst different tough duties, multiplying numerous numbers. “If we have been simply utilizing CPUs, we’d’ve needed to double our server fleet,” says Norm Jouppi, a pc engineer at Google. “In order that didn’t sound notably interesting.” As a substitute, Jouppi helped to steer the event of a brand new form of chip, the tensor processing unit (TPU), as a platform for Google’s AI.

The TPU was designed particularly for the arithmetic that underpins AI. When the TPU is given one instruction, as a substitute of performing one operation, it might probably carry out greater than 100,000. (The TPU’s mathematical multitasking is a results of specifically designed circuitry and software program; today, many GPUs created with AI functions in thoughts, similar to Blackwell, have comparable capabilities.) The flexibility to execute an unlimited variety of operations with solely a restricted want to attend for directions allowed Google to speed up lots of its AI tasks, not simply its voice-transcription service.

To additional velocity up calculations, many AI chips, similar to TPUs and GPUs, use a form of digital shorthand. CPUs usually hold observe of numbers in 64-bit format — that’s 64 slots for a 0 or a 1, all of that are wanted to signify any given quantity. Utilizing a knowledge format with fewer bits can scale back the precision of calculations, so generic chips follow 64.

AI & robotics briefing: Lack of transparency surrounds Neuralink’s ‘brain-reading’ chip

But when you will get away with much less specificity, “{hardware} might be less complicated, smaller, decrease energy”, Betz says. For instance, itemizing a DNA sequence, in precept, requires solely a 2-bit format as a result of the genetic info has 4 prospects: the bases A, T, G or C (represented as 00, 01, 10 or 11). An FPGA that Cong designed2 to align genomic knowledge utilizing a 2-bit format was 28 occasions sooner than a comparable CPU utilizing a 64-bit format. To hurry up machine-learning calculations, engineers have lowered the precision of chips; TPUs depend on a specialised 16-bit format. For the newest era of chips, similar to Blackwell, customers may even select from a variety of codecs, 64-bit to 4-bit — whichever most accurately fits the calculation that must be performed.

Chips ahoy

AI chips are additionally designed to keep away from remembering an excessive amount of. Ferrying knowledge forwards and backwards between the microprocessor, during which calculations are performed, and wherever the reminiscence is saved may be extraordinarily time-consuming and energy-intensive. To fight this drawback, many GPUs have big quantities of reminiscence wired straight on a single chip — Nvidia’s Blackwell has about 200 gigabytes. When AI chips are put in in a server, they’ll additionally share reminiscence to make networking between particular person chips simpler and require much less electrical energy. Google has related practically 9,000 TPUs in a ‘pod’.

Electrical energy utilization — the burden of shifting so many electrons via circuits — is not any minor concern. Actual numbers may be laborious to come back by, however coaching GPT-3 is estimated to have consumed 1,300 megawatt hours (MWh) of electrical energy3. (The typical family in the UK consumes about 3 MWh yearly.) Even after coaching, utilizing AI functions may be an vitality sink. Though advances in chip design can enhance effectivity, the vitality prices of AI are persevering with to extend year-on-year as fashions get bigger4.

The rise of AI chips similar to GPUs doesn’t spell the top for CPUs. Moderately, the traces between even the essential sorts of chip are blurring — fashionable CPUs are higher at parallel computations than the sooner variations have been, and GPUs have gotten extra versatile than they was once. “Everyone steals from one another’s playbook,” Betz says. A model of Nvidia’s Blackwell chip pairs the GPU straight with a CPU; essentially the most {powerful} supercomputer on the earth, Frontier at Oak Ridge Nationwide Laboratory in Tennessee, depends on a mixture of CPUs and GPUs.

Given the velocity of adjustments up to now decade, researchers say it’s tough to foretell the way forward for chips — it’d embrace optical chips that use mild as a substitute of electrons5,6 or quantum computing chips. Within the meantime, some say that making the general public extra conscious of the AI {hardware} may assist to demystify the sector and proper the misperception that AI is omnipotent. “You may higher talk to people who AI isn’t any form of magic,” says Silvano.

On the root, it’s all simply wires and electrons — unlikely to take over the world, says Jouppi. “You may simply unplug it,” he says. He’s hopeful that the {hardware} will proceed to enhance, which in flip will assist to speed up AI functions in science. “I’m actually enthusiastic about that,” says Jouppi, “and I’m enthusiastic about what the longer term’s gonna convey.”