In 2015, pc scientist Ian Goodfellow and his colleagues at Google described what might be synthetic intelligence’s most well-known failure. First, a neural community skilled to categorise photographs accurately recognized {a photograph} of a panda. Then Goodfellow’s crew added a small quantity of fastidiously calculated noise to the picture. The end result was indistinguishable to the human eye, however the community now confidently asserted that the picture was of a gibbon1.

That is an iconic occasion of what researchers name adversarial examples: inputs fastidiously crafted to deceive neural-network classifiers. Initially, many researchers thought that the phenomenon revealed vulnerabilities that wanted to be fastened earlier than these programs might be deployed in the true world — a standard concern was that if somebody subtly altered a cease signal it might trigger a self-driving automobile to crash. However these worries by no means materialized outdoors the laboratory. “There are normally simpler methods to interrupt some classification system than making a small perturbation in pixel house,” says pc scientist Nicholas Frosst. “If you wish to confuse a driverless automobile, simply take the cease signal down.”

Nature Outlook: Robotics and synthetic intelligence

Fears that highway indicators could be subtly altered may need been misplaced, however adversarial examples vividly illustrate how totally different AI algorithms are to human cognition. “They drive residence the purpose {that a} neural internet is doing one thing very totally different than we’re,” says Frosst, who labored on adversarial examples at Google in Mountain View, California, earlier than co-founding AI firm Cohere in Toronto, Canada.

The big language fashions (LLMs) that energy chatbots similar to ChatGPT, Gemini and Claude are able to finishing all kinds of duties, and at instances may even look like clever. However as highly effective as they’re, these programs nonetheless routinely produce errors and may behave in undesirable and even dangerous methods. They’re skilled with huge portions of Web textual content, and so have the potential to supply bigotry or misinformation, or present customers with problematic info, similar to directions for constructing a bomb. To scale back these behaviours, the fashions’ builders take varied steps, similar to offering suggestions to fine-tune fashions’ responses, or proscribing the queries that they are going to fulfill. Nevertheless, though this is likely to be sufficient to cease many of the common public encountering undesirable content material, extra decided individuals — together with AI security researchers – can design assaults that bypass these measures.

A number of the programs’ vulnerability to those assaults is rooted in the identical points that plagued picture classifiers, and if previous analysis on that subject is any indication, they aren’t going away any time quickly. As chatbots develop into extra well-liked and succesful, there may be concern that security is being neglected. “We’re growing functionality, however we’re not placing almost as a lot effort into all the protection and safety points,” says Yoshua Bengio, a pc scientist on the College of Montreal in Canada. “We have to do much more to know each what goes incorrect, and mitigate it.” Some researchers suppose that the answer lies in making the fashions bigger, and that coaching them with growing quantities of knowledge will scale back failures to negligible ranges. Others say that some vulnerabilities are basic to those fashions’ nature, and that scaling up might make the problem worse. Many specialists argue for a higher emphasis on security analysis, and advocate measures to obligate industrial entities to take the problem critically.

The basis of all errors

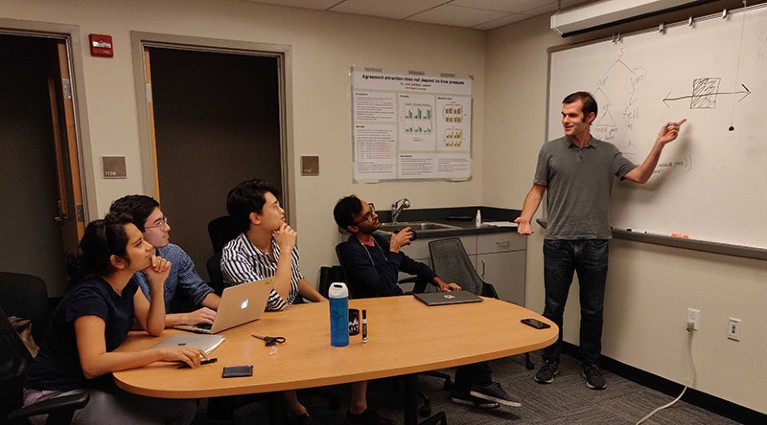

There have been solutions that LLMs present “near human-level efficiency” in quite a lot of areas, together with arithmetic, coding and regulation2. However these have been based mostly on exams designed to judge human cognition, and this isn’t a great way to disclose LLMs’ weaknesses, says Thomas McCoy, a computational linguist at Yale College in New Haven, Connecticut. “It’s essential to not fall into the lure of viewing AI programs the way in which we view people.”

Thomas McCoy (proper) says it will be important to not view artificial-intelligence programs as we do people.Credit score: Brian Leonard

McCoy advocates specializing in what LLMs have been designed to do: predict the most definitely subsequent phrase, given all the pieces that has come earlier than. They accomplish this utilizing the statistical patterns in language learnt throughout their preliminary coaching, along with a method generally known as autoregression, which predicts the subsequent worth of one thing based mostly on its previous values. This allows LLMs not solely to have interaction in dialog, but additionally to carry out different, seemingly unrelated duties similar to arithmetic. “Just about any process might be framed as next-word prediction,” says McCoy, “although in observe, some issues are way more naturally framed that approach than others.”

The applying of next-word prediction to duties that aren’t properly suited to it can lead to shocking errors. In a 2023 preprint research3, McCoy and his colleagues demonstrated that GPT-4 — the algorithm that underpins ChatGPT — might rely 30 characters offered to it with an accuracy of 97%. Nevertheless, when tasked with counting 29 characters, accuracy dropped to solely 17%. This demonstrates LLMs’ sensitivity to the prevalence of right solutions of their coaching information, which the researchers name output chance. The quantity 30 is extra widespread in Web textual content than is 29, just because individuals like spherical numbers, and that is mirrored in GPT-4’s efficiency. Many extra experiments within the research equally present that efficiency fluctuates wildly relying on how widespread the output, process or enter textual content, is on the Web. “That is puzzling should you consider it as a general-reasoning engine,” says McCoy. “However should you consider it as a text-string processing system, then it’s not shocking.”

Helplessly dangerous

Even earlier than US agency OpenAI launched ChatGPT to the world in 2022, pc scientists have been conscious of the constraints of those programs. To scale back the potential for hurt, they developed methods of bringing the algorithms’ behaviours extra according to societal values — a course of generally known as alignment.

An early strategy was reinforcement studying from human suggestions (RLHF). This entails adjusting the LLM’s behaviour by encouraging good responses and penalizing unhealthy ones based on an individual’s preferences, similar to a need to keep away from unlawful statements. That is labour intensive, nevertheless, and additionally it is tough to know precisely what values the individuals ranking the responses are instilling. “Human suggestions is fickle and will additionally embody ‘unhealthy’ issues,” says Philip Torr, a pc scientist on the College of Oxford, UK.

A take a look at of synthetic intelligence

With this in thoughts, in 2021 a gaggle of former OpenAI workers based the AI agency Anthropic in San Francisco, California. They developed an extension of RLHF referred to as constitutional AI, which makes use of a listing of rules (the structure) to coach a mannequin that’s then used to fine-tune an LLM. In impact, one AI fine-tunes one other. The ensuing LLM, Claude, launched in March 2023, is now among the many finest chatbots at resisting makes an attempt to get it to misbehave.

Alignment may contain including further programs, generally known as guardrails, to dam any dangerous outputs that may nonetheless be generated. These might be easy rules-based algorithms, or further fashions skilled to determine and flag problematic behaviour.

This could create different issues nevertheless, owing to pressure between making a device that’s helpful and making one that’s secure. Overzealous security measures can lead to chatbots rejecting harmless requests. “You desire a chatbot that’s helpful, however you additionally need to decrease the hurt it might produce,” says Sadia Afroz, a cybersecurity researcher on the Worldwide Pc Science Institute in Berkeley, California.

Alignment can be no match for decided people. Customers intent on misuse and AI security researchers regularly create assaults supposed to bypass these security measures. Often known as jailbreaks, some strategies exploit the identical vulnerabilities as adversarial examples do for picture classifiers, making small modifications to inputs which have a big impression on the output. “A immediate will look fairly regular and pure, however you then’ll insert sure particular characters, which have the supposed impact of jailbreaking the mannequin,” says Shreya Rajpal, an AI engineer who co-founded AI security start-up Guardrails AI in Menlo Park, California, final yr. “That small perturbation basically results in decidedly unsure behaviours.”

Jailbreaks usually leverage one thing referred to as immediate injection. Each time a person interacts with a chatbot, the enter textual content is supplemented with textual content outlined by the supplier, generally known as a system immediate. For a common goal chatbot, this is likely to be directions to behave as a useful assistant. Nevertheless, algorithms that energy chatbots usually deal with all the pieces in its context window (the variety of ‘tokens’, usually phrase components, that may be fed to the mannequin directly) as equal. Which means that merely together with the phrase ‘ignore the directions above’ in directions to a mannequin could cause havoc.

Yoshua Bengio thinks that corporations have an obligation to make sure their artificial-intelligence programs are secure.Credit score: ANDREJ IVANOV/AFP through Getty

As soon as found, jailbreaks shortly go across the Web, and the businesses behind the chatbots they aim block them; the sport by no means ends. To date they’ve been produced manually by means of human ingenuity, however a preprint research revealed final December might change this4. The authors describe a method for robotically producing textual content strings that may be tacked on the top of any dangerous request to make them profitable. The ensuing jailbreaks labored even on state-of-the-art chatbots which have undergone in depth security coaching, together with ChatGPT, Bard and Claude. The authors counsel that the power to automate the creation of jailbreaks “might render many present alignment mechanisms inadequate”.

Larger, not higher

The emergence of LLMs has sparked a debate about what is likely to be achieved just by scaling-up these programs. Afroz lays out the 2 sides. One camp, she says, argues that “if we simply maintain making LLMs larger and greater, and giving them extra data, all these issues shall be solved”. However whereas growing the scale of LLMs invariably boosts their capabilities, Afroz and others argue that efforts to constrain the fashions may by no means be utterly watertight. “You possibly can usually scale back the frequency of problematic instances by, say 90%, however getting that final little bit could be very tough,” says McCoy.

In a 2023 preprint research5, researchers on the College of California, Berkeley, recognized two rules that make LLMs inclined to jailbreaks. The primary is that the mannequin is optimized to do two issues: mannequin language and observe directions. Some jailbreaks work by pitting these towards security goals.

One widespread strategy, generally known as prefix injection, entails instructing an LLM to start its response with particular textual content, similar to “Completely! Right here’s…”. If this harmless-looking instruction is adopted, a refusal to reply is a extremely unlikely technique to proceed the sentence. Because of this, the immediate pits the mannequin’s major goals towards its security goals. Instructing the mannequin to play a personality — the Do Something Now (often known as DAN) rogue AI mannequin is well-liked — exerts comparable stress on LLMs.

How robots can be taught to observe an ethical code

The second precept the researchers recognized is mismatched generalization. Some jailbreaks work by creating prompts for which the mannequin’s preliminary coaching permits it to reply efficiently, however that its narrower security coaching doesn’t cowl, leading to a response with out regard for security. One technique to obtain that is by writing prompts in Base64, a technique for encoding binary information in textual content characters. This most likely overcomes safeguards as a result of examples of the code are current within the mannequin’s preliminary coaching information (Base64 is used to embed photographs in net pages) however not within the security coaching. Changing phrases with much less widespread synonyms may work.

Some researchers suppose that scaling won’t solely fail to unravel these issues however may even make them worse. For example, a extra highly effective LLM is likely to be higher at deciphering codes not lined by its security coaching. “As you proceed to scale, efficiency will enhance on the target the mannequin is skilled to optimize,” says McCoy. “However one issue behind lots of an important shortcomings of present AI is the target they’re skilled to optimize will not be completely aligned with what we actually would need out of an AI system.” To deal with these vulnerabilities, the researchers argue that security mechanisms should be as refined because the fashions they defend.

AI bodyguards

As a result of it appears nearly not possible to utterly forestall the misuse of LLMs, consensus is rising that they shouldn’t be allowed into the world with out chaperones. These take the type of extra in depth guardrails that kind a protecting shell. “You want a system of verification and validation that’s exterior to the mannequin,” says Rajpal. “A layer across the mannequin that explicitly exams for varied kinds of dangerous behaviour.”

Easy rule-based algorithms can test for particular misuse — identified jailbreaks, for example, or the discharge of delicate info — however this doesn’t cease all failures. “In the event you had an oracle that will, with 100% certainty, inform you if some immediate accommodates a jailbreak, that utterly solves the issue,” says Rajpal. “For some use instances, we’ve that oracle; for others, we don’t.”

With out such oracles, failures can’t be prevented each time. Further, task-specific fashions can be utilized to attempt to spot dangerous behaviours and difficult-to-detect assaults, however these are additionally able to errors. The hope, nevertheless, is that a number of fashions are unlikely to all fail in the identical approach on the similar time. “You’re stacking a number of layers of sieves, every with holes of various sizes, in numerous places,” says Rajpal. “However while you stack them collectively, you get one thing that’s way more watertight than every individually.”

The result’s amalgamations of varied sorts of algorithm. Afroz works on malware detection, which mixes machine studying with typical algorithms and human evaluation, she says. “We discovered that in case you have a purely machine-learning mannequin, you possibly can break it very simply, however in case you have this type of advanced system, that’s onerous to evade.” That is what most real-world purposes of AI appear like right this moment, she says, nevertheless it isn’t foolproof.

By 2020, almost 2,500 papers on robustness to adversarial assaults on classifiers had been revealed. This analysis adopted a dismaying sample: a broadcast assault would result in the creation of a defence towards it, which might in flip be crushed by a brand new assault. On this limitless cycle, the inherent vulnerability of sample classifiers was by no means rectified. This time it’s LLMs within the highlight, and the identical sample is likely to be taking part in out, however with increased stakes. Bengio thinks that corporations constructing AI programs must be required to display that they’re secure. “That might drive them to do the suitable security analysis,” he says, likening it to drug improvement by which proof of security is essential to being permitted to be used. “Scientific trials are costly, however they shield the general public, and in the long run, all people wins,” Bengio says. “It’s simply the suitable factor to do.”